Handle massive volumes of media: one customer was able to ingest up to 2,000 hours of video per hour while reducing costs by 30–60% compared with traditional pipelines.*

Coactive Multimodal AI Platform

A full-stack platform for multimodal intelligence, purpose-built for the scale, precision, and control that media & entertainment demands.

Coactive Multimodal AI Platform Core Capabilities

Manage and activate catalogs across image, video, and audio modalities.

Automate multimodal tagging, enrich libraries, streamline QA, and scale discovery.

Search using natural language, concepts, or content cues.

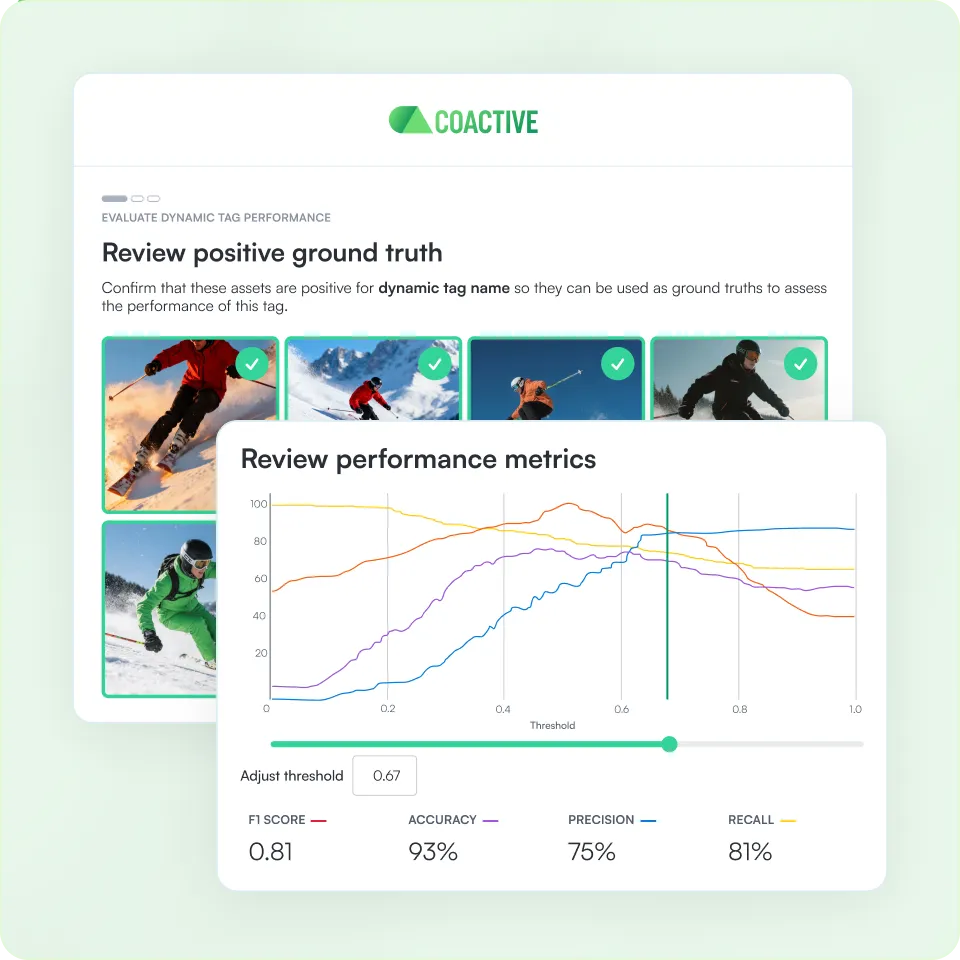

Monitor drift, compare versions, validate precision–recall, and approve production thresholds.

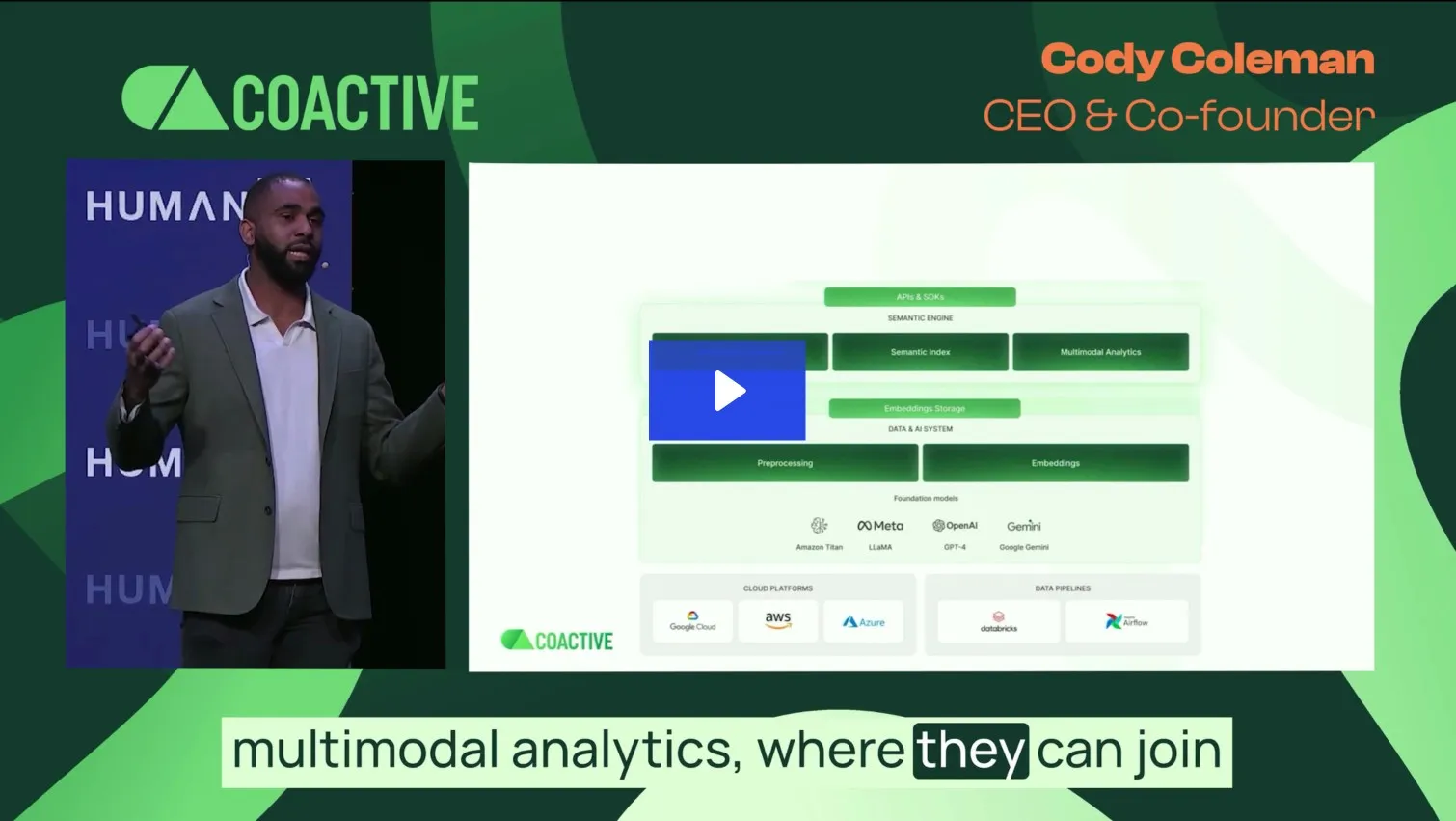

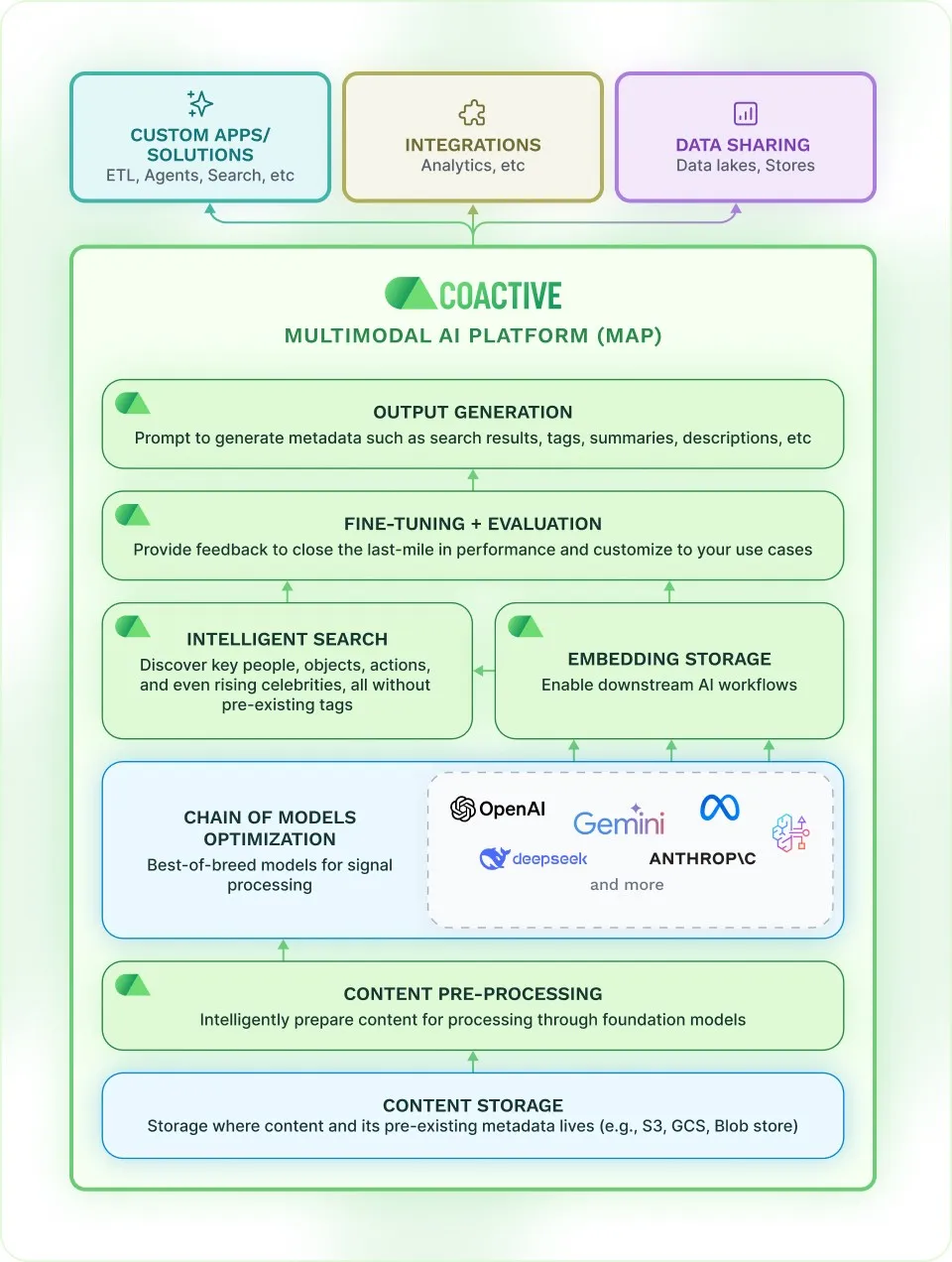

Multimodal AI Platform (MAP)

Coactive provides a single place to manage and activate content catalogs across image, video, and audio. The platform handles the heavy lifting so you can focus on building and iterating on your app’s product logic.

Coactive segments video into shots and audio intervals, laying the groundwork for rich semantic tagging and search. No reprocessing is needed: content stays reusable, searchable, and ready for evolving workflows.
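Coactive does not describe its segmentation method here. As a rough illustration of what "segmenting video into shots" means, a classic shot-boundary technique flags a cut wherever the color histograms of consecutive frames differ by more than a threshold. The sketch below assumes precomputed, normalized per-frame histograms; the function names and the 0.5 threshold are hypothetical, not part of Coactive's platform.

```python
from typing import List, Tuple


def l1_distance(a: List[float], b: List[float]) -> float:
    """Sum of absolute differences between two normalized histograms."""
    return sum(abs(x - y) for x, y in zip(a, b))


def segment_shots(
    histograms: List[List[float]], fps: float, threshold: float = 0.5
) -> List[Tuple[float, float]]:
    """Split a frame sequence into shots wherever consecutive frames differ sharply.

    Hypothetical helper: returns (start_seconds, end_seconds) pairs that cover
    the whole clip, one pair per detected shot.
    """
    if not histograms:
        return []
    boundaries = [0]  # frame indices where a new shot starts
    for i in range(1, len(histograms)):
        if l1_distance(histograms[i - 1], histograms[i]) > threshold:
            boundaries.append(i)
    boundaries.append(len(histograms))  # sentinel marking the end of the last shot
    return [(start / fps, end / fps) for start, end in zip(boundaries, boundaries[1:])]


# Toy 3-bin histograms: frames 0-2 are mostly dark, frames 3-5 mostly bright.
frames = [[0.8, 0.2, 0.0]] * 3 + [[0.0, 0.2, 0.8]] * 3
print(segment_shots(frames, fps=1.0))  # [(0.0, 3.0), (3.0, 6.0)]
```

Each resulting time range is the kind of unit that tags and search results can later be aligned to; production systems typically use learned detectors rather than a fixed histogram threshold.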

Use Coactive’s open model catalog, or plug in models via Bedrock, Azure AI, or Databricks. Chain models for deeper insights.

Tailor models to your domain with fine-tuning that’s decoupled, evaluable, and built for iteration.

Turn unstructured video, image, and audio into rich, time-aligned, searchable content—so you can find exactly what matters, fast.

Robust, specific tagging that can power ad targeting, content customization, personalization, and more.

*Based on an actual customer experience. Ingestion performance is dependent on specific conditions, and your experience may vary.

AI-powered Metadata

Automatically tag content across video and audio assets. Structure and enrich content libraries to power personalized recommendations and improve content discoverability for end users.

Fuse semantic signals from visuals, spatial audio, and dialogue to generate precise, context-aware tags that support discovery, suitability, and reuse.

Speed up evaluation with AI-powered prompt suggestions, tag preview, and threshold tuning.

Eliminate the manual burden of creating evaluation datasets: LLMs automatically generate pseudo-ground-truth labels, so you can have confidence in tag accuracy.

Unlike with other platforms and cloud providers, you can fine-tune repeatedly using stored embeddings, with no per-run pricing or forced reprocessing.
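Why stored embeddings make repeated fine-tuning cheap can be sketched with a generic technique: a small logistic-regression "head" trained on embedding vectors that were computed once at ingestion. Only the head's weights change between runs, so the underlying media never has to be decoded or re-embedded. This is an assumed, simplified stand-in, not Coactive's actual training procedure; `train_concept_head`, `score`, and the toy vectors are hypothetical.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_concept_head(
    embeddings: Sequence[Sequence[float]],
    labels: Sequence[int],
    epochs: int = 200,
    lr: float = 0.5,
) -> List[float]:
    """Fit a logistic-regression head on cached embeddings with plain SGD.

    Returns weights with the bias as the last element. The embeddings are
    reused as-is, so retraining with new labels reruns only this loop.
    """
    dim = len(embeddings[0])
    w = [0.0] * (dim + 1)  # last entry is the bias term
    for _ in range(epochs):
        for x, y in zip(embeddings, labels):
            # zip stops at len(x), so w[-1] (the bias) is added separately
            z = sum(wi * xi for wi, xi in zip(w, x)) + w[-1]
            err = sigmoid(z) - y  # gradient of log-loss with respect to z
            for i in range(dim):
                w[i] -= lr * err * x[i]
            w[-1] -= lr * err
    return w


def score(w: Sequence[float], x: Sequence[float]) -> float:
    """Probability that embedding x shows the concept."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + w[-1])


# Hypothetical 2-D embeddings stored from an earlier ingestion run.
cached = [[1.0, 0.1], [0.9, 0.2], [0.1, 1.0], [0.2, 0.9]]
labels = [1, 1, 0, 0]  # 1 = concept present

head = train_concept_head(cached, labels)
print(score(head, [0.95, 0.15]) > 0.5)  # True
```

Real embeddings have hundreds of dimensions and a library optimizer would replace the hand-written loop, but the cost structure is the same: each new iteration touches only vectors already on disk.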

Reuse your refined taxonomy across datasets—scaling tagging without redundant effort.

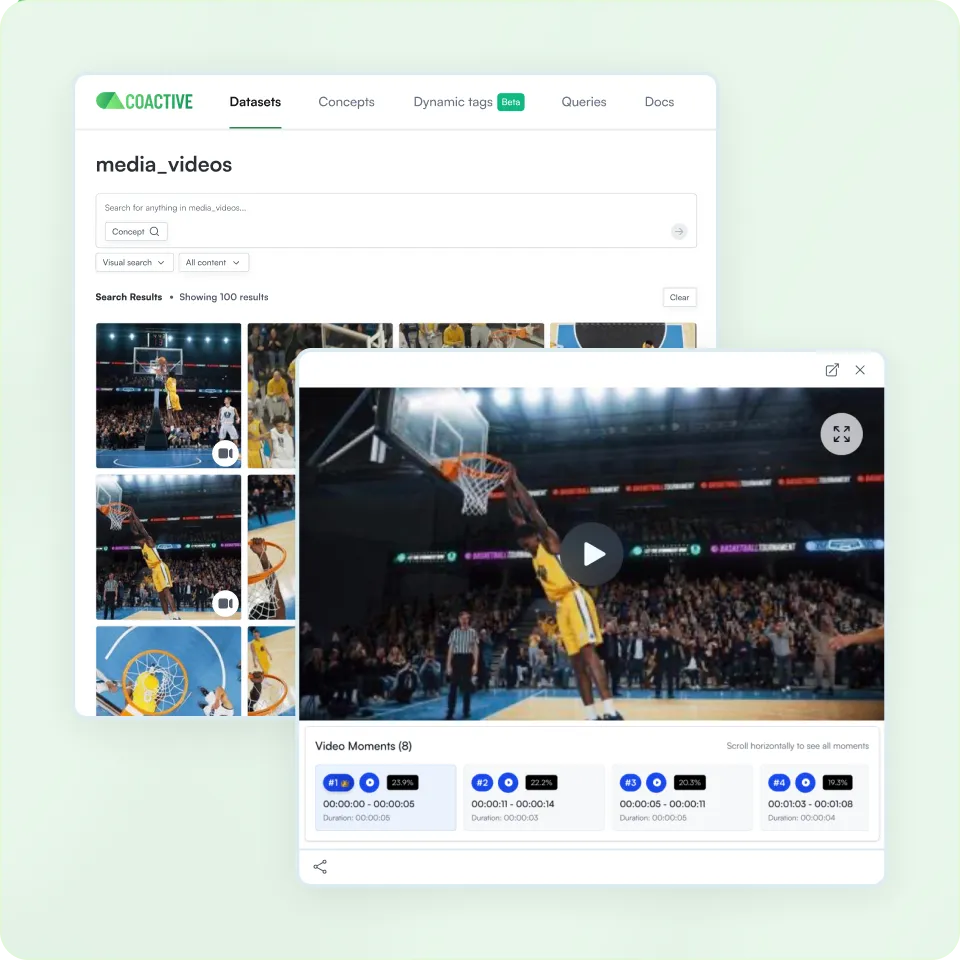

Semantic Search

Search video, image, and audio using natural language. Coactive’s semantic signals deliver more accurate, intent-aligned results.

Search returns full videos anchored to the most relevant moment, with the ability to explore other moments tied to your query.
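One plausible way to return whole videos anchored to their best moment is to score every segment against the query, group segments by video, rank videos by their top segment, and keep the remaining segments as "other moments." The sketch below assumes the query and segments are already embedded in a shared text-video space by the same model, and uses max-pooling as the video-level aggregation; that choice, the `search` function, and the data layout are hypothetical rather than Coactive's documented ranking.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

# (video_id, segment_start_seconds, embedding); a hypothetical layout
Segment = Tuple[str, float, List[float]]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query: Sequence[float], segments: Sequence[Segment], top_k: int = 3):
    """Rank whole videos by their best-matching segment.

    Returns (video_id, anchor_seconds, other_moments) tuples, where
    other_moments lists the remaining segment start times, best match first.
    """
    per_video: Dict[str, List[Tuple[float, float]]] = defaultdict(list)
    for video_id, start, emb in segments:
        per_video[video_id].append((cosine(query, emb), start))
    ranked = []
    for video_id, hits in per_video.items():
        hits.sort(reverse=True)  # highest similarity first
        best_score, anchor = hits[0]
        others = [start for _, start in hits[1:]]
        ranked.append((best_score, video_id, anchor, others))
    ranked.sort(reverse=True)  # best video first
    return [(vid, anchor, others) for _, vid, anchor, others in ranked[:top_k]]


segments = [
    ("ep1", 0.0, [1.0, 0.0]),
    ("ep1", 42.0, [0.6, 0.8]),
    ("ep2", 10.0, [0.0, 1.0]),
    ("ep2", 95.0, [0.8, 0.6]),
]
print(search([0.0, 1.0], segments))
# [('ep2', 10.0, [95.0]), ('ep1', 42.0, [0.0])]
```

Max-pooling favors videos with one strongly matching moment; averaging segment scores instead would favor videos that match the query throughout.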

Fine-tune Coactive to detect the concepts that matter to you—like brand cues in ads, key characters in content, sensitive scenes for safety review, or custom tags for media operations.

Enhance search with existing titles, episodes, and series information from your DAM, with no extra tagging required. Don’t have a DAM? No problem: Coactive can apply this metadata for you.

Surface the right clips faster, with fewer off-target results to sift through.

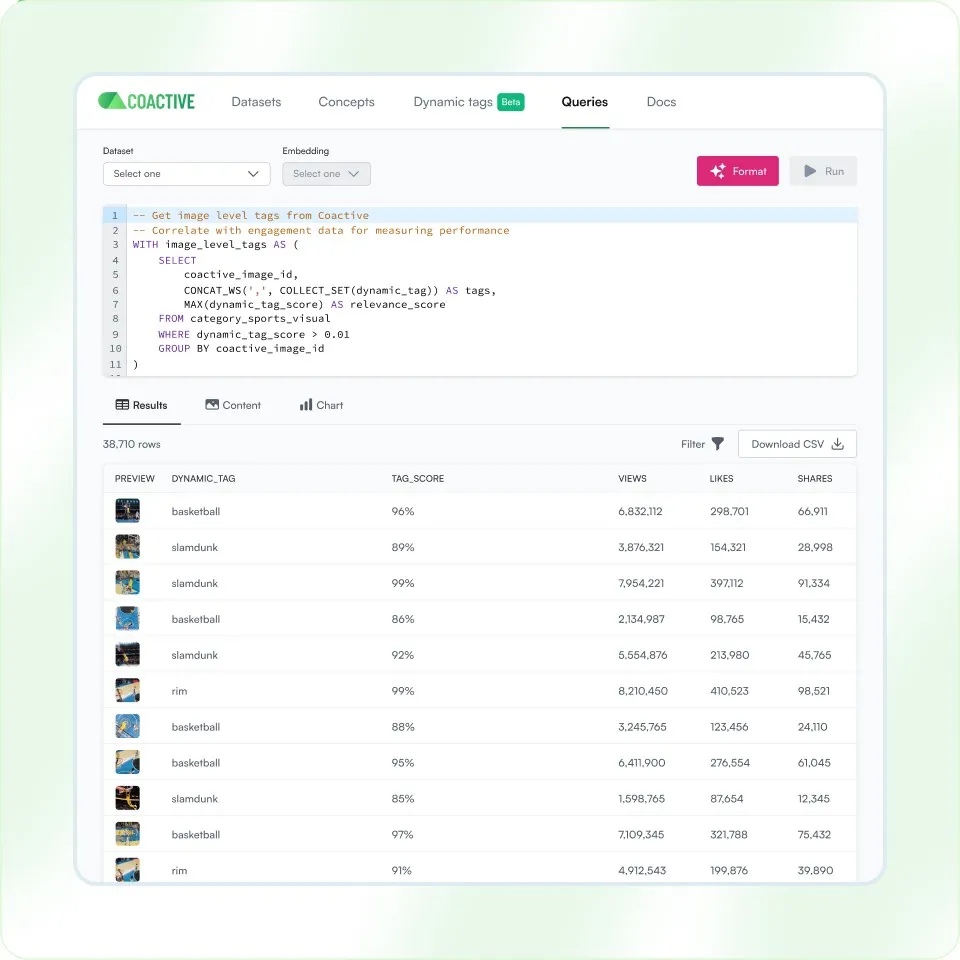

Advanced Analysis

Coactive’s SQL analysis capabilities let teams query, filter, and evaluate large-scale visual datasets—enabling precise, programmatic insights across content performance, behavior, and risk.

Query visual and transcript data using SQL across systems via Coactive’s analytics API. Leverage metadata from Dynamic Tags and Concepts for rich, cross-modal analysis.

Analyze content engagement, user search patterns, ad performance, and content risk—filtering by thresholds, tag scores, or aggregated video-level signals.
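The schema behind Coactive's analytics API is not shown here, so the following is only an illustration of the kind of query described above: rolling segment-level tag scores up to video-level signals and filtering by a threshold. Python's built-in `sqlite3` with an in-memory database stands in for the real backend, and the `tag_scores` table, its columns, and the `sensitive_scene` tag are all hypothetical.

```python
import sqlite3

# Hypothetical schema: one row per (video, segment, tag) with a model score.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE tag_scores (video_id TEXT, segment_start REAL, tag TEXT, score REAL)"
)
conn.executemany(
    "INSERT INTO tag_scores VALUES (?, ?, ?, ?)",
    [
        ("ep1", 0.0, "sensitive_scene", 0.91),
        ("ep1", 30.0, "sensitive_scene", 0.40),
        ("ep2", 0.0, "sensitive_scene", 0.12),
        ("ep2", 30.0, "sensitive_scene", 0.75),
        ("ep3", 0.0, "sensitive_scene", 0.05),
    ],
)

# Aggregate segment scores into video-level signals, then keep only videos
# where at least one segment crosses the review threshold.
query = """
SELECT video_id,
       MAX(score) AS max_score,
       AVG(score) AS mean_score,
       SUM(score >= :threshold) AS flagged_segments
FROM tag_scores
WHERE tag = :tag
GROUP BY video_id
HAVING MAX(score) >= :threshold
ORDER BY max_score DESC
"""
rows = conn.execute(query, {"tag": "sensitive_scene", "threshold": 0.7}).fetchall()
for row in rows:
    print(row)  # (video_id, max_score, mean_score, flagged_segments)
```

In SQLite a comparison such as `score >= :threshold` evaluates to 0 or 1, so `SUM` counts flagged segments; other SQL engines may need an explicit `CASE` expression.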

Export raw tag scores and probabilities to S3, Python, R, Excel, or PySpark for F1 scoring, experiments, and threshold tuning.
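Once raw scores are exported, F1 scoring and threshold tuning reduce to a short sweep against labeled examples. The sketch below assumes you have exported per-item scores for one tag plus binary labels (human or pseudo-ground-truth); it tries each distinct score as a cutoff and keeps the one with the highest F1. The function names and data are hypothetical; libraries such as scikit-learn offer equivalent, better-tested utilities.

```python
from typing import Sequence, Tuple


def prf_at(
    scores: Sequence[float], labels: Sequence[int], threshold: float
) -> Tuple[float, float, float]:
    """Precision, recall, and F1 when predicting positive for score >= threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def best_threshold(
    scores: Sequence[float], labels: Sequence[int]
) -> Tuple[float, float]:
    """Try each distinct score as a cutoff; return (threshold, F1) with the highest F1."""
    candidates = sorted(set(scores))
    return max(
        ((t, prf_at(scores, labels, t)[2]) for t in candidates),
        key=lambda pair: pair[1],
    )


# Hypothetical exported scores for one tag, with binary labels.
scores = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
labels = [1, 1, 0, 1, 0, 0]
print(best_threshold(scores, labels))  # threshold 0.4, F1 about 0.857
```

F1 weights precision and recall equally; for safety review, a team might instead pick the lowest threshold whose precision (from `prf_at`) still meets a target.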