Handle massive volumes of media—one customer was able to ingest up to 2,000 video hours per hour while reducing costs by 30–60% compared to traditional pipelines.*

Power your video and image apps the easy way

The Coactive Multimodal AI Platform is a powerful, flexible way for developers to create game-changing apps, fast.

Working with video and images is hard. We make it simple.

It’s expensive, time-consuming, and downright maddening to develop apps based on video and images. And multimodal foundation models only get you to square one. That’s why there’s Coactive.

We built a complete stack so that you don’t have to. Find the perfect moment, automatically create robust and specific metadata, and quickly understand what’s in your content library.

Our optimized platform handles massive scale at speed. Reusable embeddings minimize long-term compute and storage costs.

You’re not locked in to any one foundation model. Choose the right model for your needs, and chain models for deeper understanding.

Multimodal AI Platform (MAP)

Coactive provides a single place to manage and activate content catalogs across image, video, and audio. The platform handles the heavy lifting so you can focus on building your app’s product logic and iterating.

Coactive segments video into shots and audio into intervals, laying the groundwork for rich semantic tagging and search. No reprocessing is needed: content stays reusable, searchable, and ready for evolving workflows.

Use Coactive’s open model catalog, or plug in models via Bedrock, Azure AI, or Databricks. Chain models for deeper insights.

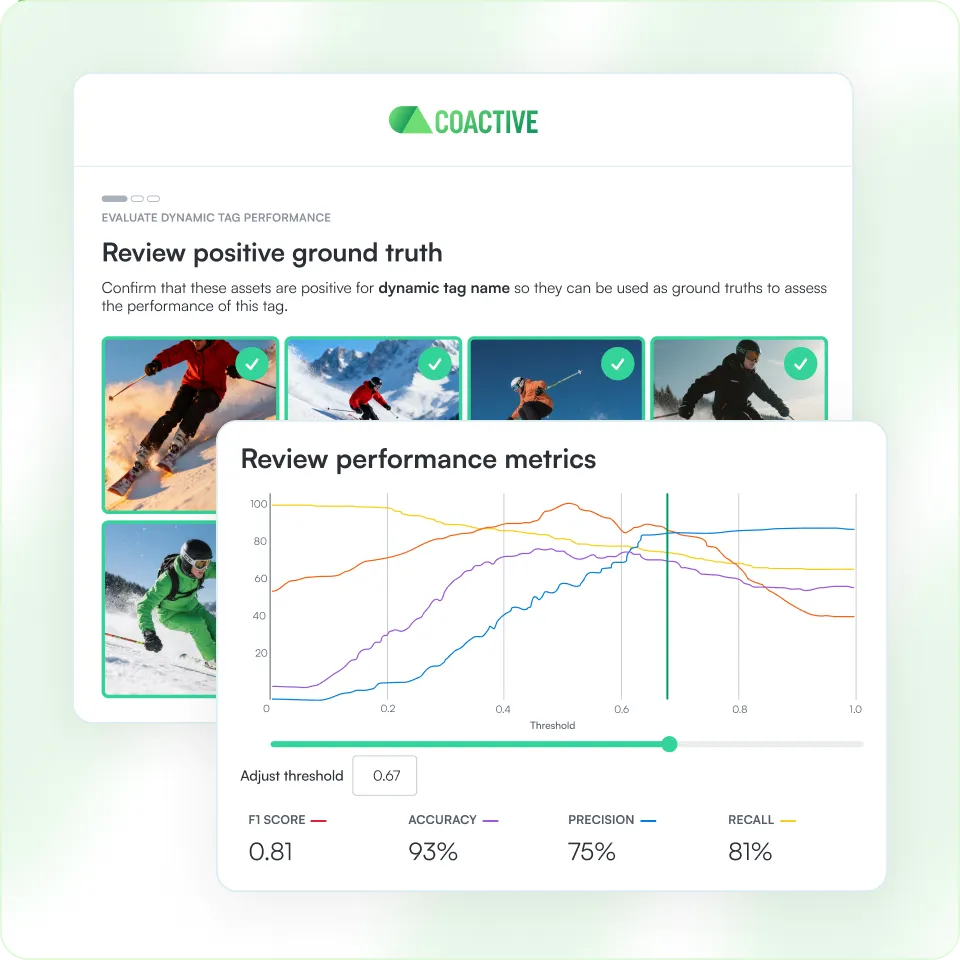

Customize to fit your domain with fine-tuning that’s decoupled, evaluable, and built for iteration.

Turn unstructured video, image, and audio into rich, time-aligned, searchable content—so you can find exactly what matters, fast.

Robust, specific tagging that can power ad targeting, content customization, personalization, and more.

*Based on an actual customer experience. Ingestion performance is dependent on specific conditions, and your experience may vary.

Fast ingestion at scale. Smart, too.

Handle massive volumes of media with automatic chunking into meaningful segments, embedding, and lineage tracking for trust and reproducibility.

Ingest petabytes of video and images across cloud or on-prem storage. Coactive is architected to handle large-scale, concurrent processing with minimal bottlenecks.

Coactive automatically splits video and audio into meaningful segments (shots and dialogue intervals) to enable precise analysis without overloading compute.
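To make the idea of shot segmentation concrete, here is a minimal sketch. It assumes you already have a per-frame difference score (for example, a histogram distance between consecutive frames) and cuts wherever that score crosses a threshold. The function name `split_into_shots` and the threshold value are illustrative assumptions, not Coactive's actual method or API.

```python
from typing import List, Tuple


def split_into_shots(frame_diffs: List[float], threshold: float = 0.5) -> List[Tuple[int, int]]:
    """Split a frame sequence into shots.

    frame_diffs[i] is the (hypothetical) difference score between frame i
    and frame i + 1. A score above `threshold` is treated as a cut.
    Returns inclusive (start_frame, end_frame) pairs.
    """
    shots = []
    start = 0
    for i, diff in enumerate(frame_diffs):
        if diff > threshold:
            shots.append((start, i))  # shot ends at frame i
            start = i + 1             # next shot begins at frame i + 1
    # The last shot runs to the final frame, whose index is len(frame_diffs).
    shots.append((start, len(frame_diffs)))
    return shots


# Eight frames, so seven difference scores; two of them look like cuts.
diffs = [0.1, 0.05, 0.9, 0.1, 0.2, 0.8, 0.1]
shots = split_into_shots(diffs)
print(shots)
```

Each shot can then be analyzed on its own, which is what keeps compute bounded for long videos.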

Unlike other platforms that stop at just marking shot boundaries, Coactive enables context-aware analysis across segments.

Every asset and transformation is traceable—down to the version of each model used. This ensures consistent outputs across iterations and full auditability.
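As a rough illustration of what version-level lineage can look like, the sketch below records the model name, model version, and a hash of the input for each processing step, then derives a deterministic ID from those fields. The `LineageRecord` class and its field names are assumptions made for this example, not Coactive's schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class LineageRecord:
    """One processing step applied to one asset (hypothetical schema)."""
    asset_id: str
    step: str
    model_name: str
    model_version: str
    input_sha256: str

    def record_id(self) -> str:
        # Same fields always produce the same ID, so reruns are comparable.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:16]


def make_record(asset_id: str, step: str, model_name: str,
                model_version: str, input_bytes: bytes) -> LineageRecord:
    digest = hashlib.sha256(input_bytes).hexdigest()
    return LineageRecord(asset_id, step, model_name, model_version, digest)


rec_v1 = make_record("video-001", "embed", "example-encoder", "1.0.0", b"frame bytes")
rec_v1_again = make_record("video-001", "embed", "example-encoder", "1.0.0", b"frame bytes")
rec_v2 = make_record("video-001", "embed", "example-encoder", "1.1.0", b"frame bytes")

print(rec_v1.record_id() == rec_v1_again.record_id())  # same inputs, same ID
print(rec_v1.record_id() == rec_v2.record_id())        # model version changed
```

Because the ID changes whenever the model version changes, outputs produced by different model versions are never confused with one another in an audit.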

Pick your foundation model winner

Coactive lets you pick from a broad portfolio of multimodal foundation models, so you can use the best one for your needs. Chain models for deeper insights.

Access a growing library of hosted open-source and proprietary models, all pre-integrated and ready for deployment.

Integrate your preferred closed or fine-tuned models through platforms like Amazon Bedrock, Azure AI, and Databricks. Maintain full control over what runs where.

Design flexible inference pathways by chaining models together. For example, run lightweight captioning first, then escalate to deeper multimodal analysis only when needed.
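The escalation pattern described above can be sketched in a few lines. This example assumes each model returns a caption with a confidence score, and that a fixed threshold decides when to escalate. The `chain` function, the `Caption` type, and both stand-in models are hypothetical and exist only to show the control flow.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Caption:
    text: str
    confidence: float  # 0.0 to 1.0


Model = Callable[[str], Caption]


def chain(asset: str, light_model: Model, deep_model: Model,
          threshold: float = 0.8) -> Tuple[Caption, str]:
    """Run the cheap model first; call the expensive one only on low confidence."""
    caption = light_model(asset)
    if caption.confidence >= threshold:
        return caption, "light"
    return deep_model(asset), "deep"


# Stand-in models for illustration only.
def light_captioner(asset: str) -> Caption:
    confidence = 0.9 if asset.startswith("simple") else 0.4
    return Caption(f"caption for {asset}", confidence)


def deep_analyzer(asset: str) -> Caption:
    return Caption(f"detailed analysis of {asset}", 0.95)


result_easy = chain("simple_clip.mp4", light_captioner, deep_analyzer)
result_hard = chain("crowded_scene.mp4", light_captioner, deep_analyzer)
print(result_easy[1], result_hard[1])
```

The design choice here is that the expensive model runs only for assets the lightweight model is unsure about, which is where the cost savings in a chained pipeline would come from.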

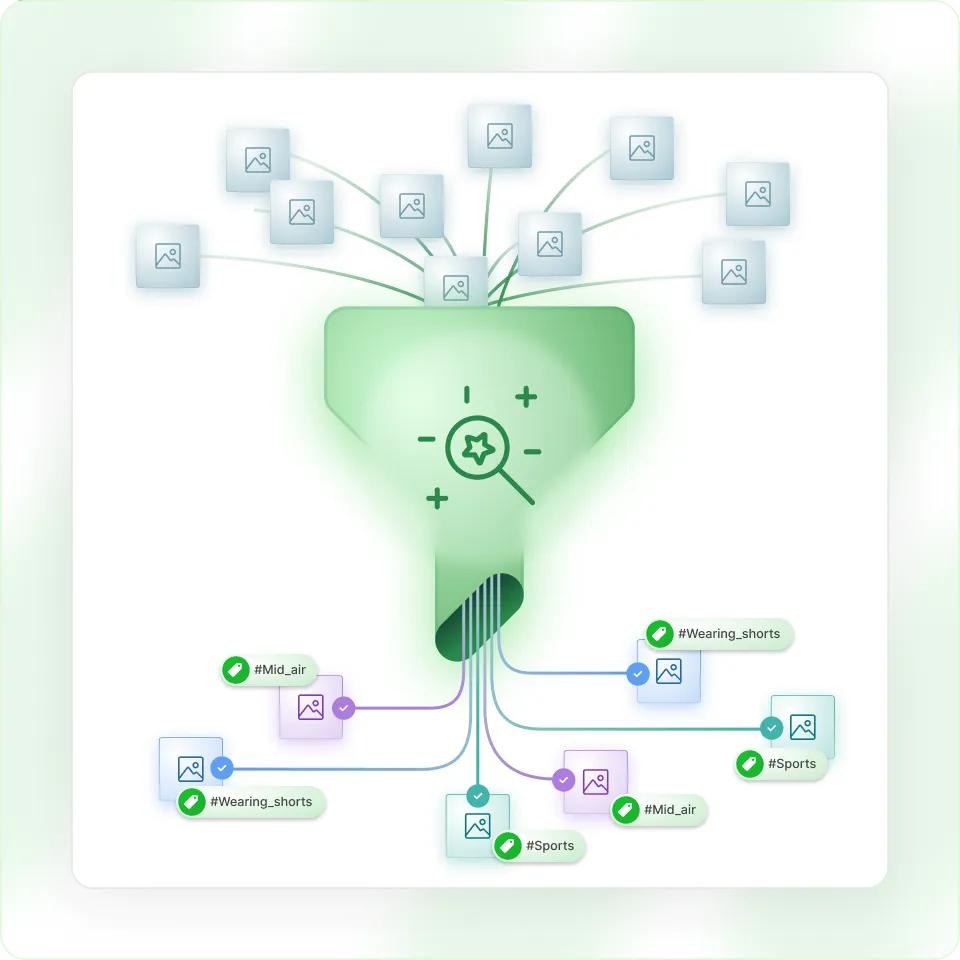

Find the right asset, quickly and accurately

Coactive turns unstructured video, image, and audio into rich, time-aligned, searchable content—so you can find exactly what matters, fast.

With a unified semantic search layer, you can search across all assets—whether tagged by lightweight captioning models or deeper multimodal pipelines—through a single, consistent API.

Query visual, audio, and transcript signals simultaneously to locate specific moments, patterns, or concepts. Results are time-aligned and traceable back to source assets.
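One common way to get time-aligned, traceable results is to store an embedding per segment alongside its source asset and timestamps, then rank segments by cosine similarity to the query embedding. The sketch below assumes that approach. The `Segment` type, the `search` function, and the three-dimensional toy vectors are illustrative assumptions and do not represent the Coactive API.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    asset_id: str       # source asset the segment traces back to
    start_s: float      # segment start, in seconds
    end_s: float        # segment end, in seconds
    embedding: List[float]


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_embedding: List[float], segments: List[Segment],
           top_k: int = 2) -> List[Segment]:
    """Return the top_k segments most similar to the query embedding."""
    ranked = sorted(segments,
                    key=lambda s: cosine(query_embedding, s.embedding),
                    reverse=True)
    return ranked[:top_k]


index = [
    Segment("game.mp4", 0.0, 4.5, [0.9, 0.1, 0.0]),
    Segment("game.mp4", 4.5, 9.0, [0.1, 0.9, 0.1]),
    Segment("interview.mp4", 12.0, 20.0, [0.2, 0.8, 0.3]),
]

hits = search([0.0, 1.0, 0.0], index)
for hit in hits:
    print(hit.asset_id, hit.start_s, hit.end_s)
```

Every hit carries its asset ID and time range, so a result can be opened at the exact moment it refers to.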

Coactive supports both natural language and structured SQL-style queries, so you can power editorial tools, creative review workflows, and automated routing systems.

Learn more about content discovery with Coactive.

Get robust metadata in minutes

Turn visual and audio content into structured metadata—automatically and at scale.

Tag assets using natural language prompts with frame- to video-level precision. Start with zero-shot, refine with examples—no model training required.
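To show how "start with zero-shot, refine with examples" can work without training a model, here is a small sketch. It assumes a text prompt and each asset have already been mapped into a shared embedding space. Zero-shot scoring compares the prompt vector to the asset vector, and refinement averages the prompt vector with a few positive example vectors. The function names and toy two-dimensional vectors are assumptions for illustration, not Coactive's implementation.

```python
import math
from typing import List


def normalize(v: List[float]) -> List[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else v


def score(tag_vec: List[float], asset_vec: List[float]) -> float:
    """Cosine similarity between a tag vector and an asset vector."""
    return sum(a * b for a, b in zip(normalize(tag_vec), normalize(asset_vec)))


def refine(tag_vec: List[float], positive_examples: List[List[float]]) -> List[float]:
    """Average the prompt vector with example vectors; no gradient updates."""
    vectors = [normalize(tag_vec)] + [normalize(e) for e in positive_examples]
    return [sum(column) / len(vectors) for column in zip(*vectors)]


prompt_vec = [1.0, 0.0]   # hypothetical embedding of a prompt such as "goal celebration"
asset_vec = [0.6, 0.8]    # hypothetical embedding of a video segment

zero_shot = score(prompt_vec, asset_vec)

# One labeled positive example nudges the tag vector toward real content.
refined_vec = refine(prompt_vec, [[0.5, 0.5]])
refined = score(refined_vec, asset_vec)

print(round(zero_shot, 3), round(refined, 3))
```

In this toy case the refined tag scores the asset higher than the zero-shot prompt alone, because the example pulls the tag vector toward what matching content actually looks like.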

Extract objects, scenes, dialogue, and segments across video, image, and audio—all aligned to your custom metadata structure.

Query results via SQL or API. Coactive’s model-agnostic architecture integrates with your stack and separates metadata from embeddings for faster, cheaper performance.
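The sketch below illustrates the general idea of keeping metadata separate from embeddings and querying it with SQL. It uses Python's built-in `sqlite3` module as a stand-in database. The table names, column names, and sample rows are invented for this example and are not Coactive's schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Metadata and embeddings live in separate tables (hypothetical schema),
# so tag queries never have to read the large vector column.
conn.executescript("""
CREATE TABLE segment_metadata (
    segment_id TEXT PRIMARY KEY,
    asset_id   TEXT,
    start_s    REAL,
    end_s      REAL,
    tag        TEXT,
    score      REAL
);
CREATE TABLE segment_embeddings (
    segment_id TEXT PRIMARY KEY,
    vector     BLOB
);
""")

conn.executemany(
    "INSERT INTO segment_metadata VALUES (?, ?, ?, ?, ?, ?)",
    [
        ("s1", "game.mp4", 0.0, 4.5, "crowd", 0.91),
        ("s2", "game.mp4", 4.5, 9.0, "goal celebration", 0.88),
        ("s3", "promo.mp4", 0.0, 6.0, "goal celebration", 0.42),
    ],
)

# Find confidently tagged moments without touching the embeddings table.
rows = conn.execute(
    """
    SELECT asset_id, start_s, end_s
    FROM segment_metadata
    WHERE tag = ? AND score >= ?
    ORDER BY score DESC
    """,
    ("goal celebration", 0.8),
).fetchall()

print(rows)
conn.close()
```

Keeping the two tables apart means a metadata query scans only small rows, which is one plausible reason a split design would be faster and cheaper.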

The Smart, Layered Approach to Content Intelligence

Flexibility