Coactive AI Series B Funding Round

Over the past decade, we've seen an unprecedented surge in image and video content. Yet enterprises have struggled to do much more than store it, making it more of a tax (or liability) than an asset. We founded Coactive in 2021 to fix that. Coactive is building an enterprise-grade operating system for visual content that unlocks the untapped potential of images and videos for applications ranging from intelligent search to video analytics, with no metadata or tags required. Instead of relying on tedious and expensive tagging and labeling, we use multimodal AI in a highly scalable and secure way to pull context directly from the pixels and audio in the content, giving data, marketing, and product teams superpowers! Since our launch last year, tech-enabled enterprises, including Fortune 500 retailers, media and entertainment companies, and community platforms, have chosen Coactive to build intelligent applications that generate value from their massive volumes of unstructured visual content.

But this is only the beginning. Today we’re excited to announce $30 million in Series B funding to accelerate the path to a no-metadata future and cement our position as the leading platform for analyzing images and videos. Cherryrock Capital, in its first investment, co-led the round with Emerson Collective. Greycroft also participated significantly, alongside previous investors Andreessen Horowitz, Bessemer Venture Partners, and Exceptional Capital.

"We are thrilled to be in partnership with Cody, Will, and the entire Coactive team. We believe they are using the most advanced technology for unstructured data to solve real customer problems. We look forward to bringing our operational expertise to help them scale as they build a world-class multimodal AI company," said Stacy Brown-Philpot, Co-founder & Managing Partner, Cherryrock Capital.

Computer systems have driven massive paradigm shifts in the past, from digital transformation to the big data movement. Yet visual data (images and videos) has remained elusive, even as it makes up a growing share of everyday life. The ways we work, communicate, shop, and are entertained are all increasingly visual, thanks to the rise of video conferencing, social media, e-commerce, and streaming services. Even the web looks very different now than it did in the 1990s. Images and videos are everywhere, yet our systems have remained blind to this shift.

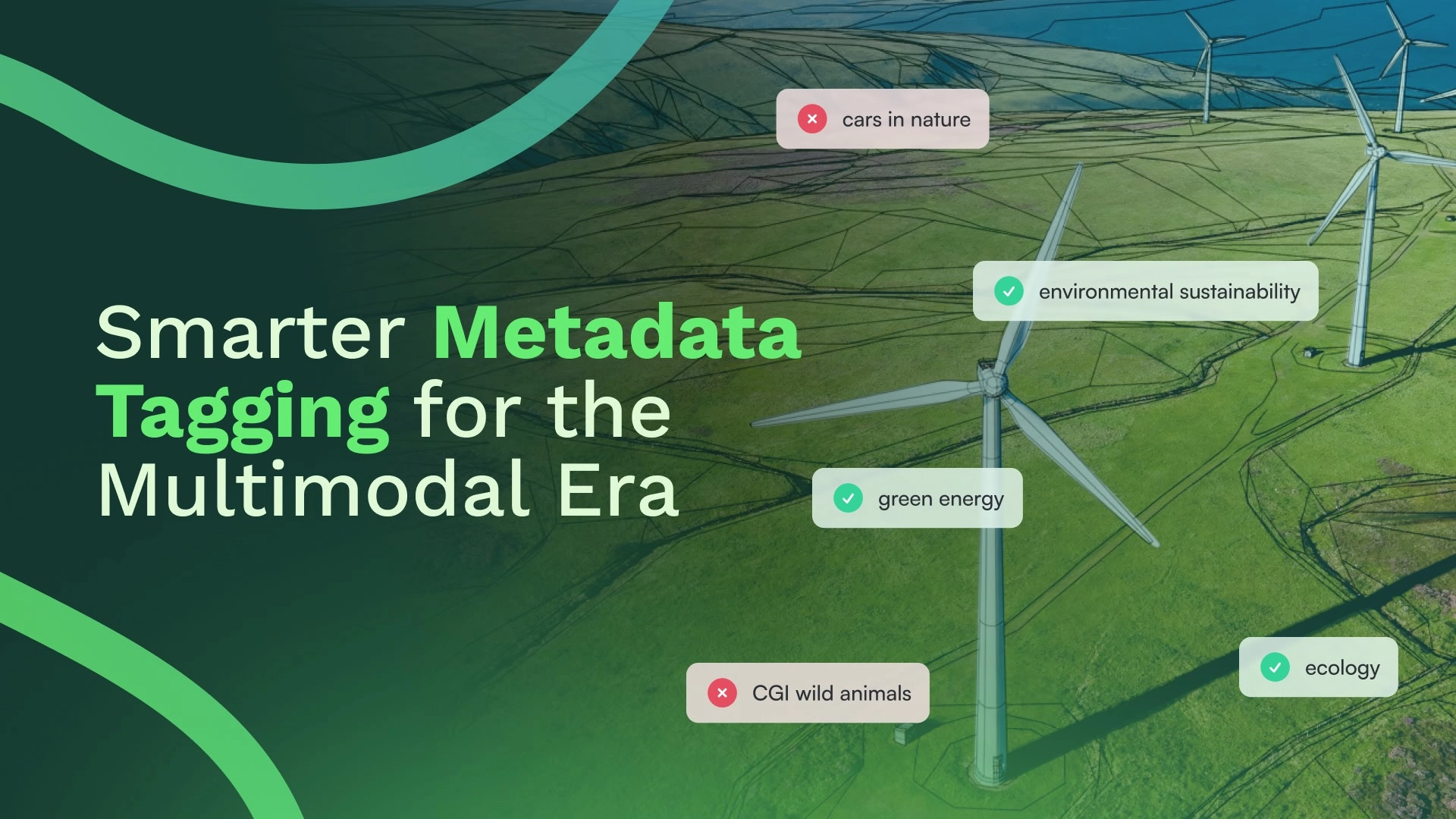

Enterprises are stuck in a world of tag, load, search (TLS). First, they tag raw assets with human or machine annotations. Then they load those annotations into their systems as metadata. Finally, they search based on those annotations. That process is slow, expensive, and inflexible, and it does not scale to the volume of image and video content we have today. With the rise of generative AI, TLS will no longer be able to keep up.

Coactive flips the process on its head with a load, search, tag (LST) approach. With our platform, enterprises can load and index raw images and videos directly and make them searchable, with no metadata or tags required. Tags are needed only for enterprise-specific terminology and domain-specific concepts where precision matters, or for backward compatibility with existing metadata systems. We do all of the heavy lifting to understand the pixels and audio directly, in a scalable and secure way, giving enterprises a new set of superpowers. Find clips in less than a second, not hours. Develop a sixth sense for detecting content that violates standards and practices or editorial guidelines. Cover breaking news before AI can generate it. And turn raw video files from a tax into an asset that generates new revenue streams. This transition from TLS to LST mirrors the shift from ETL (Extract, Transform, Load) to ELT (Extract, Load, Transform) in data processing. It represents a fundamental leap forward, comparable to the move from horses to automobiles.

"Coactive’s platform allowed full customization to match our unique guidelines. And in just four weeks, 88% of our manual labeling was automated," notes Florent Blachot, VP of Data Science & Engineering at Fandom. "Coactive allows Fandom to improve the user safety of our wiki communities while reducing cost." Blachot’s experience shows how Coactive delivers both substantial performance gains and cost savings.

Until now, only technology companies with deep machine learning expertise could even attempt to bring together the data, systems, and AI needed to make this transition. At Coactive, we set out to democratize AI by creating a Multimodal Application Platform (MAP) that enables any enterprise to build intelligent applications in a scalable and secure way, with no metadata or PhDs required. Over the past year, our simple, powerful web application and APIs have enabled both technical and non-technical teams to search and review content like never before. Today, we are writing the next chapter in the big data movement with our MAP, which lets teams navigate oceans of unstructured data and brings the full power of analytics to image and video content.

If you are looking to leverage AI to supercharge how your company works with visual content, get started today!